Cloud:

The first thing to understand is that we're in an era where the public cloud lets us experiment with many new services, simply because the cloud already offers them as managed services. This means customers can test new functionality at a fraction of the cost of traditional infrastructure, where you'd have to build and deploy everything yourself. This allows for quicker adoption of new technology.

Containers:

For example, let's say I wanted to test some cloud-native apps and some scaling functionality. How can I do that? Well, I can package my app in a container and run that container in the cloud using an orchestration platform. In the case of AWS, we have EKS (Elastic Kubernetes Service) and ECS (Elastic Container Service), both of which we can leverage for our containers. If you need or want Kubernetes, go with EKS; if you don't care about Kubernetes, you also have the option of ECS.

Why would I run my workload as containers? Containers are a lot more portable because they are much smaller than VMs, it is quite easy to scale a container cluster, and running containers is usually more cost-effective than running virtual machines since containers use fewer resources.

Containers also allow us to use different technology stacks for different microservices. A microservices-oriented architecture splits the services that make up a single monolithic app into smaller parts, and each part can be built in a different programming language. This means you can have one team dedicated to microservice A and another dedicated to microservice B, each using a completely different language. Why? Because each programming language is suited to solving different problems. You can't use Python for every project, for example, but it does have its use cases.

This also means that you need a team with the expertise to manage these containers, so there are tradeoffs that each organization has to weigh before making the decision that is best for them.

Infrastructure As Code:

IaC is a big part of deploying and managing resources in the cloud. The traditional approach, where most resources are deployed manually, does not translate well to the cloud era, where the cloud is predominantly API based and most communication between services goes through APIs. With IaC tools like Terraform, we can take advantage of the API-based nature of the cloud and deploy and manage our resources through code.

An IaC tool lets you write configuration files or scripts that automate the deployment of resources in the cloud. We can share this code with other team members, and it also serves as a kind of documentation: you can read it and see what is deployed. Many IaC tools, Terraform included, are declarative: you define the desired state and the tool figures out how to deploy the infrastructure. Imperative tools, by comparison, have you define every single step in a more granular way. You could argue that imperative is better because describing every step yourself gives you more control than letting an IaC tool handle it for you, but it also means that you need to be very well-versed in that language, which makes it harder to learn.
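To make the declarative idea more concrete, here is a minimal Python sketch of what "define the desired state and let the tool figure out the steps" can look like. This is not how Terraform is implemented; the `plan` function and the resource names (`web-server`, `database`, `old-cache`) are made up for illustration.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> list[tuple[str, str]]:
    """Return the (action, resource_name) steps that move `current` toward `desired`."""
    steps = []
    for name, spec in desired.items():
        if name not in current:
            steps.append(("create", name))   # declared but not deployed yet
        elif current[name] != spec:
            steps.append(("update", name))   # deployed, but settings differ
    for name in current:
        if name not in desired:
            steps.append(("delete", name))   # deployed but no longer declared
    return steps


# Hypothetical desired state (what we declare) and current state (what exists).
desired = {
    "web-server": {"size": "small", "count": 2},
    "database": {"size": "medium", "count": 1},
}
current = {
    "web-server": {"size": "small", "count": 1},
    "old-cache": {"size": "small", "count": 1},
}

for action, name in plan(desired, current):
    print(action, name)
# update web-server
# create database
# delete old-cache
```

With an imperative approach you would write those three steps by hand. With a declarative approach you only edit `desired`, and the tool works out the steps.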

One of the advantages of a declarative IaC tool is that its configuration files should be easy to read and easy to pick up.

Configuration Management tools:

CM tools are quite popular as well, and they serve a different function than IaC tools do. CM tools configure applications and operating systems, whereas IaC tools deploy the infrastructure itself. Of course, there is some overlap between the two. You can also combine IaC and CM tools in your deployment pipeline. An example of a CM tool is Ansible from Red Hat.
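As a rough illustration of the kind of work a CM tool does, the sketch below makes sure a setting is present in a configuration file and reports whether anything changed. It is plain Python, not Ansible, and the `ensure_line` helper, the `app.conf` file, and the `max_connections=100` setting are all hypothetical. I'm also assuming here that running the same configuration twice should leave the system unchanged the second time, which is how CM tools typically behave.

```python
import tempfile
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Make sure `line` is present in the file; return True if the file was changed."""
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False                      # already configured, nothing to do
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True


# Usage: a temporary directory stands in for a real server's config directory.
with tempfile.TemporaryDirectory() as tmp:
    config = Path(tmp) / "app.conf"       # hypothetical config file
    print(ensure_line(config, "max_connections=100"))  # True: the file was changed
    print(ensure_line(config, "max_connections=100"))  # False: already in place
```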

Bash and PowerShell:

If you work with operating systems like Linux and Windows, chances are you've seen some Bash scripts and some PowerShell scripts. In the world of DevOps, you will be doing a lot of scripting in different scripting languages; which ones depends on the OS you're using and your needs. From my experience, knowing at least one scripting language like Bash or PowerShell is important. I've spent many years in the Windows world, so PowerShell is not new to me, but Bash is. Either way, it is important to identify at least one scripting language that you can become good at.

With these scripting languages, we can automate operating system tasks and deploy apps on these operating systems.

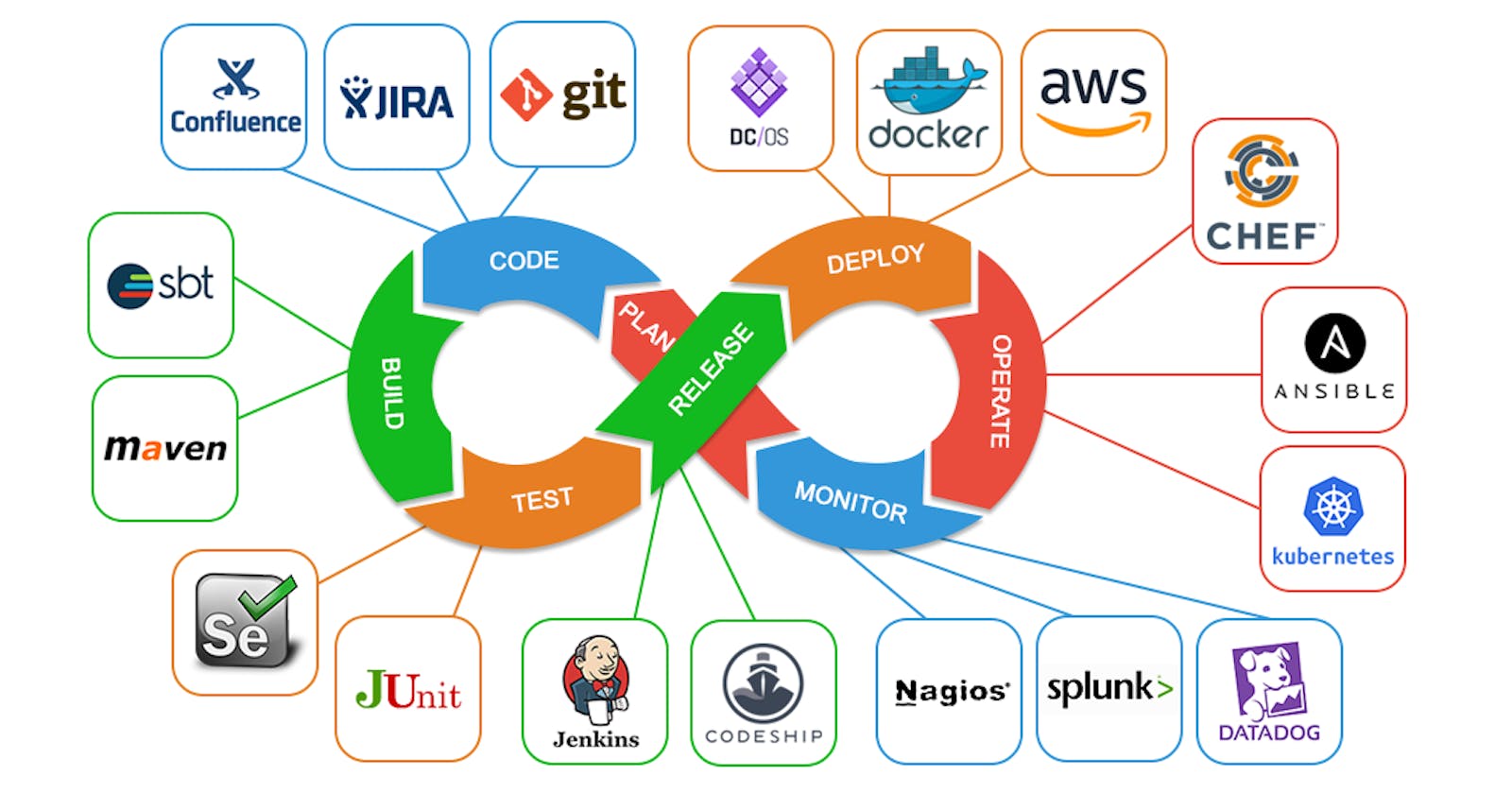

Continuous Integration / Continuous Deployment / Delivery (CI/CD):

CI/CD is another concept that is important to understand. In DevOps, you usually deploy something by following a pipeline or workflow. The goal of CI/CD is to automate the deployment of new features so that you can continuously introduce them to an application.
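A pipeline can be pictured as an ordered list of stages, where a failure stops everything that comes after it. The Python sketch below is only a toy model of that idea: the stage names (`build`, `test`, `deploy`) and the `run_pipeline` function are assumptions for illustration, and a real CI/CD system would run actual build and test commands instead of the placeholder lambdas.

```python
from typing import Callable

# A stage is a name plus a function that returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure. Returns a result log."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            break                          # never deploy something that failed earlier
    return log


# Placeholder stages; each lambda stands in for a real command.
stages = [
    ("build", lambda: True),
    ("test", lambda: True),
    ("deploy", lambda: True),
]
print(run_pipeline(stages))
# ['build: ok', 'test: ok', 'deploy: ok']

failing = [
    ("build", lambda: True),
    ("test", lambda: False),               # simulate a failing test suite
    ("deploy", lambda: True),
]
print(run_pipeline(failing))
# ['build: ok', 'test: failed']
```

The second run shows why the automation matters: the broken change never reaches the deploy stage.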

If you think about cloud services today, you never hear about a service going down because engineers needed to add new features. Sure, services do go down from time to time, but usually because someone made a mistake. A service should never have to go down to add new features.

Orchestrators:

Orchestrators are one of the core elements of cloud-native apps. When deploying containers, you have to choose how you want to manage and orchestrate them. Orchestrators essentially manage the life cycle of the containers running on a cluster. Examples of orchestrators include Kubernetes, Nomad, and Docker Swarm.
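One way to picture life cycle management is a loop that keeps the number of running containers equal to the number you asked for. The Python sketch below is a simplified model of that idea, not the actual logic of Kubernetes, Nomad, or Docker Swarm; the `reconcile` function and the `app-N` container names are hypothetical.

```python
def reconcile(desired_replicas: int, running: list[str], next_id: int) -> tuple[list[str], int]:
    """Start or stop containers until the running count matches the desired count."""
    running = list(running)                # work on a copy of the current state
    while len(running) < desired_replicas:
        running.append(f"app-{next_id}")   # "start" a replacement container
        next_id += 1
    while len(running) > desired_replicas:
        running.pop()                      # "stop" a surplus container
    return running, next_id


# Ask for three replicas on an empty cluster.
running, next_id = reconcile(3, [], 0)
print(running)   # ['app-0', 'app-1', 'app-2']

# Simulate one container crashing, then reconcile again.
running.remove("app-1")
running, next_id = reconcile(3, running, next_id)
print(running)   # ['app-0', 'app-2', 'app-3']
```

Scaling works the same way in this model: change `desired_replicas` and the next pass adds or removes containers to match.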

Version Control System (VCS):

VCS is a big part of the DevOps space. A VCS is where you store all your code and configuration files so that other members of the team can access them. This is important when you want to collaborate on projects with multiple engineers: you need a central store to which everyone has access.

Documentation:

It is very important to keep documentation up to date. You should always document your code, which is typically done within the VCS and sometimes in the script itself.

Measure everything:

One way you can gauge the impact you're having on the infrastructure is to measure how your services are deployed and look at metrics such as deployment speed to see where the process can improve. This is an iterative process, so it will always be part of deploying and managing services.
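As a small example of what measuring can look like, the Python sketch below summarizes a handful of deployment records. The numbers are invented sample data, and the field names (`duration_s`, `succeeded`) and the `summarize` function are assumptions for illustration; in practice the records would come from your pipeline or monitoring tooling.

```python
from statistics import mean

# Invented sample data: one record per deployment.
deployments = [
    {"duration_s": 120, "succeeded": True},
    {"duration_s": 95, "succeeded": True},
    {"duration_s": 240, "succeeded": False},
    {"duration_s": 105, "succeeded": True},
]


def summarize(runs: list[dict]) -> dict:
    """Compute the average deployment time and the share of failed deployments."""
    durations = [run["duration_s"] for run in runs]
    failures = sum(1 for run in runs if not run["succeeded"])
    return {
        "mean_duration_s": mean(durations),
        "failure_rate": failures / len(runs),
    }


print(summarize(deployments))
# {'mean_duration_s': 140, 'failure_rate': 0.25}
```

Tracking numbers like these over time shows whether a change to the process actually made deployments faster or more reliable.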

Thank you for reading!

Hope you find this article helpful, and yes, please do share your views in the comment section. I love hearing feedback on my blog!

Don't forget to follow me on socials: